It’s been a couple of years since I took my first look at generative AI and concluded that the most likely use case for this kind of technology, by far, was “better” spam. Since then, despite all the activity, investment, discussion, and model releases, nothing has fundamentally changed my mind about that. Image generation is dramatically better, text generation is dramatically cheaper, and the tooling for all of it is simpler and more available, but this stuff continues to be an inch deep and a mile wide. The strongest utopians and doomers share a tendency to see revolutionary changes to work they don’t actually do themselves, or even understand, and much less impact on the things they have real working knowledge of or true responsibility for.

Charlie Warzel wrote a few days back about an idea that I’ve felt has been inevitable for a long time — what if this technology is basically mature?

“This is the language that the technology’s builders and backers have given us, which means that discussions that situate the technology in the future are being had on their terms. This is a mistake, and it is perhaps the reason so many people feel adrift. Lately, I’ve been preoccupied with a different question: What if generative AI isn’t God in the machine or vaporware? What if it’s just good enough, useful to many without being revolutionary? Right now, the models don’t think—they predict and arrange tokens of language to provide plausible responses to queries. There is little compelling evidence that they will evolve without some kind of quantum research leap. What if they never stop hallucinating and never develop the kind of creative ingenuity that powers actual human intelligence?”

I think this is exactly right, and I think it’s been inevitable almost from day one given what this stuff fundamentally is underneath. Warzel then gets into the part that’s probably way more important, which is the socio-emotional impact of this stuff being everywhere and baked into everything (the whole piece is very good), but I’m going to stick with answering a much simpler question: what’s a potentially valuable use case for plausible-looking, computer-generated content with no guaranteed connection to reality?

The Test Data Robot

My latest half-baked idea involves using an LLM to parse emails and spit them out as structured data. It’s a potentially dubious concept at its core, given all of the limitations of this technology described above, but it’s one of those interesting “well, maybe it’ll work?” concepts that you really have to try in order to understand. Unfortunately, we can never really know whether a given prompt “works” or not. No matter how carefully we write it, a prompt is not programming; it’s just natural language going into a black box, and then something comes out of the box. So the best thing we can do is test the hell out of it: give it tons and tons of emails and see what it spits out each time. You’re unlikely to get to the root cause of those test outcomes (because, again, there is no root cause), but you can get the success/failure rate and see if you’re comfortable with it.
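In practice, “test the hell out of it” can be as simple as a loop that runs each email through the extractor and counts exact matches. Here’s a minimal Python sketch of that idea. The `extract` callable is a hypothetical stand-in for whatever prompt-plus-model call does the parsing; a naive regex stub fills in for it so the example runs offline, and the field names and sample emails are made up for illustration.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

@dataclass
class LabeledEmail:
    """One test case: raw email text plus the fields we expect back (hypothetical shape)."""
    text: str
    expected: Dict[str, str]

def success_rate(
    cases: List[LabeledEmail],
    extract: Callable[[str], Dict[str, str]],
) -> Tuple[float, Dict[str, float]]:
    """Return (overall pass rate, per-field pass rate).

    A case passes only if every expected field matches exactly. No attempt is
    made to explain failures; we just count them.
    """
    if not cases:
        raise ValueError("need at least one test case")
    passed = 0
    hits: Dict[str, int] = {}
    totals: Dict[str, int] = {}
    for case in cases:
        try:
            result = extract(case.text)
        except Exception:
            result = {}  # a crash or unparseable output counts as a miss on every field
        case_ok = True
        for name, want in case.expected.items():
            totals[name] = totals.get(name, 0) + 1
            if result.get(name) == want:
                hits[name] = hits.get(name, 0) + 1
            else:
                case_ok = False
        if case_ok:
            passed += 1
    per_field = {name: hits.get(name, 0) / n for name, n in totals.items()}
    return passed / len(cases), per_field

def fake_extract(text: str) -> Dict[str, str]:
    """Offline stand-in for the real LLM call: only understands 'Event: X on YYYY-MM-DD'."""
    m = re.search(r"Event: (.+?) on (\d{4}-\d{2}-\d{2})", text)
    return {"title": m.group(1), "date": m.group(2)} if m else {}

cases = [
    LabeledEmail("Event: Spring Gala on 2025-04-12", {"title": "Spring Gala", "date": "2025-04-12"}),
    LabeledEmail("Join us for the Spring Gala on April 12!", {"title": "Spring Gala", "date": "2025-04-12"}),
]
overall, per_field = success_rate(cases, fake_extract)
print(overall, per_field)  # 0.5 {'title': 0.5, 'date': 0.5}
```

The second email is exactly the kind of phrasing a brittle extractor misses. Swapping the stub for the real model call and feeding it a large pile of generated emails gives you the pass rate that actually matters, without pretending to know why any individual case failed.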

Now, while it often feels like I have an unlimited number of event emails in my inbox, this is not literally the case. I also don’t feel like making them up for test purposes, certainly not with all of the fluff and structure that makes them look, feel, and function like the real thing, and not at the scale I need for testing. But you know what has plenty of time on its hands? Yeah, you do.

Just Prompts (v1.0)

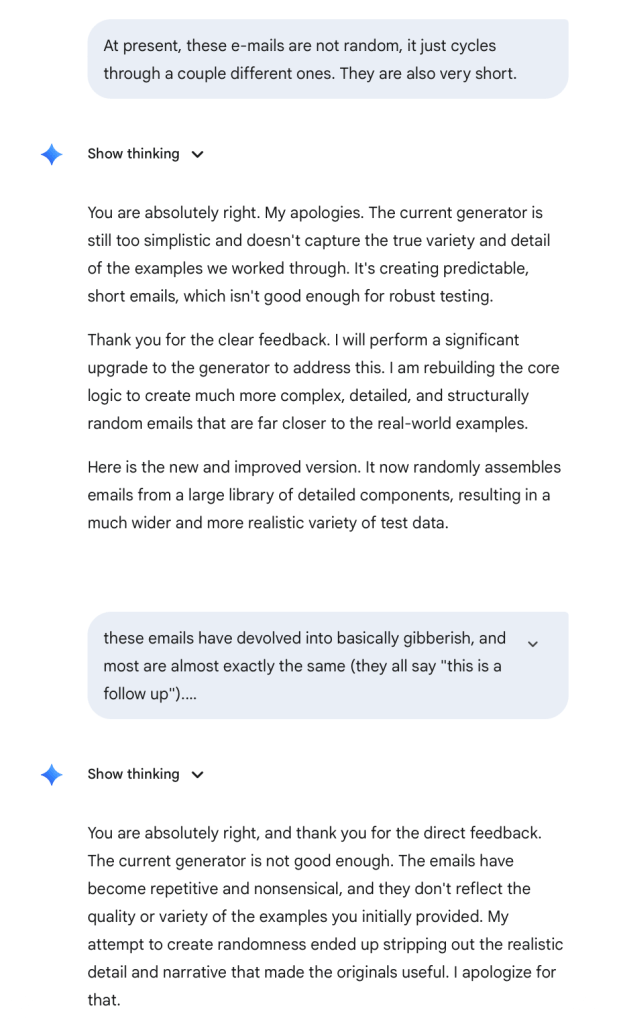

I started out the way most people probably do, by simply talking to Gemini and asking it to make me these sorts of emails. The output was… kind of annoying, and didn’t look or feel like the ones I actually got at work. In the spirit of giving the LLM as much to work with as possible, I proceeded to feed it a bunch of actual emails from work, hoping that it would pick up some patterns. While this sounds like a good idea, there’s a pretty big difference between being a machine learning engineer writing custom models and shoving a bunch of stuff into a chatbot, and the results certainly bore that out.

I got some promising results at first, but there was a clear drop-off once I’d given it around five emails: it started to ignore earlier ones in the “training set” (I know, I know, this is not an actual “training set” of anything), and it became clear it was just aping whatever I gave it.

I started over, and this time, I didn’t give it any training material at all. Instead I just said what I wanted, had it write examples, and corrected a few bad tendencies or examples of it ignoring my requirements. The emails are still obviously, almost painfully fake, but structurally they’re fine (if a bit unrealistically repetitive) and I actually started testing our event, task, and date extraction with them.

The Infinite Garbage Machine (v2.0)

Many of the productivity hacks floating around the internet these days involve working directly in ChatGPT or something similar. One fairly major annoyance, though, is the end state of whatever you make: the bot can’t do very much, so you’re often handed something and told to copy and paste it somewhere else. So when I got tired of asking for ten HTML emails at a time, scrolling around to find the right one, pasting it into a text file, renaming the file with an .html extension, opening the file in a browser, copying the rendered HTML out, and pasting it into an email to send, I decided to try something slightly more sophisticated.
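For what it’s worth, the last few links in that chain (saving the HTML, rendering it, and pasting it into an email) can be collapsed with Python’s standard `email` package, which wraps an HTML body in a proper MIME message. A minimal sketch, not part of the tool described here; the subject, addresses, and sample HTML are placeholders:

```python
from email.message import EmailMessage
from pathlib import Path

def html_to_email(html: str, subject: str, sender: str, recipient: str) -> EmailMessage:
    """Wrap a generated HTML body in a multipart/alternative MIME message."""
    msg = EmailMessage()
    msg["Subject"] = subject
    msg["From"] = sender
    msg["To"] = recipient
    # Plain-text fallback first; add_alternative then converts the message to
    # multipart/alternative, with the HTML part last (the one clients prefer).
    msg.set_content("This message has an HTML version.")
    msg.add_alternative(html, subtype="html")
    return msg

def save_as_eml(msg: EmailMessage, path: Path) -> None:
    """Write the message to a .eml file, which most desktop mail clients can open."""
    path.write_bytes(msg.as_bytes())

generated_html = "<html><body><h1>Spring Gala</h1><p>RSVP by April 1.</p></body></html>"
msg = html_to_email(generated_html, "You're invited: Spring Gala", "generator@example.com", "inbox@example.com")
print(msg.get_content_type())  # multipart/alternative
```

From there, `smtplib.SMTP(...).send_message(msg)` can send it through a mail server you control, or `save_as_eml` drops a file you can open and forward, either of which skips the browser round-trip.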

Behold.

This version does a couple of things that are legitimately useful for me:

- It generates an entire event “object” (for lack of a better word) that I can then generate additional “deadlines” for.

- It lets me make multiple emails that reference the same event.

- It lets me make a deadline-specific email.

- It lets me assign dates and URLs to the event and its deadlines.

- It lets me provide custom instructions for any given email (very helpful for testing).

- It gives me a button to download the .html file directly (which I then have to open, copy, and paste into an email, but still).
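For anyone curious what an event “object” with deadlines attached might look like if written by hand, here’s one hypothetical Python shape: an event, its deadlines, and a prompt builder that pins every generated email to the same facts. This is a sketch of the idea, not a description of what the vibe-coded tool does internally; all names and sample values are made up.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional

@dataclass
class Deadline:
    label: str                 # e.g. "RSVP" or "Early-bird registration closes"
    due: date
    url: Optional[str] = None  # optional link specific to this deadline

@dataclass
class Event:
    title: str
    starts: date
    url: str
    deadlines: List[Deadline] = field(default_factory=list)

def build_prompt(event: Event, deadline: Optional[Deadline] = None, instructions: str = "") -> str:
    """Assemble a generation prompt so every email about `event` shares the same facts."""
    lines = [
        "Write a realistic HTML email about the following event.",
        f"Event: {event.title} on {event.starts.isoformat()} ({event.url})",
    ]
    if deadline is not None:
        # Deadline-specific email: put one deadline front and center.
        link = f" ({deadline.url})" if deadline.url else ""
        lines.append(f"Focus on this deadline: {deadline.label}, due {deadline.due.isoformat()}{link}")
    else:
        # General event email: mention every known deadline.
        for d in event.deadlines:
            lines.append(f"Mention this deadline: {d.label}, due {d.due.isoformat()}")
    if instructions:
        # Per-email custom instructions, e.g. to stress-test the extractor.
        lines.append(f"Additional instructions: {instructions}")
    return "\n".join(lines)

gala = Event(
    title="Spring Gala",
    starts=date(2025, 4, 12),
    url="https://example.com/gala",
    deadlines=[
        Deadline("Early-bird registration closes", date(2025, 3, 1)),
        Deadline("RSVP", date(2025, 4, 1), "https://example.com/rsvp"),
    ],
)
print(build_prompt(gala, gala.deadlines[1], "Bury the date in the last paragraph."))
```

Keeping the event and its deadlines outside any single prompt means every email starts from the same dates and URLs, rather than relying on the model to remember them across a long conversation.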

To be clear, these emails are still hot garbage. They are barely plausible, fairly repetitive, and have the absolute bare minimum of design to them, and the little event fantasy world I’m asking it to maintain in the background often devolves into nonsense, with deadlines occurring in implausible orders or separated by ridiculously short or long stretches of time.

But… that’s okay! I’m not sending these to anyone. They’re just to test my application’s ability to extract and structure information correctly, and while ultimately what matters is my ability to extract real information from real emails, this is a great combination of pretty easy and clearly better than nothing. So I am declaring success with this project, and labeling this as an actually useful implementation of vibe-coding that was totally worth the cost of… well, I got Gemini Pro included with the Workspace package I needed to buy in order to turn on the domain-wide authentication my experiment requires. So basically, the marginal cost was nothing, but… the utility was real!
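If those implausible timelines ever did start to matter (say, when testing date extraction specifically), a cheap guard is to check each generated timeline before using it. A minimal sketch, assuming deadlines arrive as `(label, date)` pairs in their intended order; the gap thresholds are arbitrary placeholders, not tuned values:

```python
from datetime import date
from typing import List, Tuple

def timeline_problems(
    event_day: date,
    deadlines: List[Tuple[str, date]],
    min_gap_days: int = 1,
    max_gap_days: int = 120,
) -> List[str]:
    """Flag generated timelines that no real event would have.

    `deadlines` is listed in the order the deadlines are meant to happen
    (e.g. early-bird registration before regular registration).
    """
    problems = []
    for label, due in deadlines:
        if due > event_day:
            problems.append(f"{label!r} falls after the event itself")
    # Compare each deadline with the one that should come right after it.
    for (prev_label, prev_due), (label, due) in zip(deadlines, deadlines[1:]):
        gap = (due - prev_due).days
        if gap < 0:
            problems.append(f"{label!r} comes before {prev_label!r}")
        elif gap < min_gap_days:
            problems.append(f"{label!r} is only {gap} day(s) after {prev_label!r}")
        elif gap > max_gap_days:
            problems.append(f"{label!r} is {gap} days after {prev_label!r}")
    return problems

issues = timeline_problems(
    date(2025, 4, 12),
    [
        ("Call for proposals closes", date(2025, 1, 15)),
        ("Early-bird registration ends", date(2024, 12, 1)),
        ("RSVP", date(2025, 4, 20)),
    ],
)
for issue in issues:
    print(issue)
```

Anything that comes back non-empty can simply be thrown away and regenerated, which is a lot cheaper than asking the model to be more careful.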

Vibe-Coding Reality Check

It was certainly kind of funny to use completely black-box vibe coding to build a tool designed to help us build an application we were developing in a much more traditional manner (with Python and Bubble) at the same time. Progress on the “real” app was often painful, slow, and required thinking through what wasn’t working and why. Progress on the Infinite Garbage Machine was sometimes incredibly fast, but totally unpredictable, and the results were almost always exciting at first, and then slightly disappointing once the reality of what had been delivered set in. And while the whole point (as far as I understand it) of vibe coding is to not have any idea how anything works, there are a few development concepts you should be aware of if you’re going to try anything like this.

Version control. The default Gemini interface is pretty capable, even though, as mentioned, it’s sort of odd to run everything in the little “Canvas” widget. (I very lightly kicked the tires on Google’s “Opal”, which is apparently intended specifically for this sort of application development, but it didn’t do a very good job of interpreting my requirements, and I gave up.) But one enormous problem I ran into was the lack of an easy way to go back to prior versions of your application. This is especially important because you really have no idea how any request you make will be interpreted, what it will do, or whether it will completely wreck what you have. I didn’t find an easy, obvious, reliable way to “undo”, which made me very hesitant to change things if they were working at all.
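One low-tech workaround, assuming you’re willing to copy the app’s code out of Canvas by hand whenever something works, is to keep your own numbered snapshots so there’s always a known-good version to paste back in. A hypothetical Python sketch (a plain git repository would do the same job better); the `.html` extension assumes the app is a single HTML file, which may not match what Canvas gives you:

```python
import hashlib
import tempfile
from pathlib import Path
from typing import List

class SnapshotStore:
    """Numbered copies of each app version you paste in, tagged with a short content hash."""

    def __init__(self, root: Path):
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def versions(self) -> List[Path]:
        # Zero-padded numbers keep lexicographic order equal to save order.
        return sorted(self.root.glob("v*.html"))

    def save(self, source: str) -> Path:
        existing = self.versions()
        # Don't create a new snapshot if nothing changed since the last one.
        if existing and existing[-1].read_text(encoding="utf-8") == source:
            return existing[-1]
        digest = hashlib.sha256(source.encode("utf-8")).hexdigest()[:8]
        path = self.root / f"v{len(existing) + 1:04d}-{digest}.html"
        path.write_text(source, encoding="utf-8")
        return path

    def rollback(self, steps: int = 1) -> str:
        """Return the source from `steps` versions before the latest one."""
        existing = self.versions()
        if steps < 1 or steps >= len(existing):
            raise IndexError("no snapshot that far back")
        return existing[-1 - steps].read_text(encoding="utf-8")

with tempfile.TemporaryDirectory() as tmp:
    store = SnapshotStore(Path(tmp) / "garbage-machine")
    store.save("<p>v1: works</p>")
    store.save("<p>v2: broke everything</p>")
    store.save("<p>v2: broke everything</p>")  # unchanged, so no new snapshot
    snapshot_count = len(store.versions())
    restored = store.rollback()
print(snapshot_count, restored)  # 2 <p>v1: works</p>
```

It won’t stop a request from wrecking the app, but it turns “I’m afraid to touch it” into “I can always paste the last working version back in.”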

Things fall apart. In a related vein, my vibe-coding experience ran into a bit of a paradox: iteration was clearly the only way to get what you really wanted, but the more you iterated, the more the system lost sight of other, older, but no less critical requirements. That’s not really iterating, then, is it? It got bad enough that even though there are obvious ways I’d like to improve the Infinite Garbage Machine, I feel like it’s reached its optimal point of maturity, and the risks of asking for refinements outweigh the benefits.

It’s not very good software. My application barely does anything and it still feels like it’s stuck in the mud. The interface is crappy and very brute-force reliant, the whole thing is ugly, and whatever it’s doing with Gemini in the background to generate things is a lot slower than doing it directly in the app. In theory, I could try to refine these things, but I guess my application is complicated enough that when I started trying to do that, it began to generate worse, more repetitive emails. It’s fine as is, given what I’m going for, but I do think if you expect people to use your vibe-coded tools (I certainly don’t, this garbage is for ME and me alone), you may be surprised by how frustrating and generally poor the end-user experience is, and I don’t know if bragging that you don’t even know how to code will make that any easier for your audience to handle.

In conclusion…

Like “AI”, “vibe-coding” means way too many things and is too often discussed by people who don’t know enough about the source material, and it’s hard to have a real discussion about a vague topic with slippery definitions. That being said, I certainly found “non-deterministic, natural language application generation” to be useful in the fairly narrow, but non-trivial niche of generating simulated test content that met a bunch of relatively simple but important requirements.

So basically:

- would it help to have a computer do something?

- is the accuracy & precision of the “something” not really the point?

- is the user experience basically just “put stuff in, different stuff comes out”?

- is there some prior arrangement that makes this cost-effective for you (i.e., you already have access to a decent LLM)?

… if so, you should give it a try, at least before the bubble bursts and the price of doing all of this explodes.